(1) Regarding trajectory prediction, I am currently debugging the training code to address the nan value appearing in the loss value.

孙愉亚 のすべての投稿

週報(SUN YUYA)

(1) I am learning the traditional optical method to compute camera or background motion.

(2) I am still making a GUI to compare multiple Super Mario RL agents.

週報(SUN YUYA)

(1) I am still training the model after exchanging the tracking datasets with the trajectory datasets.

(2) I am still making a GUI to compare multiple Super Mario RL agents.

週報(SUN YUYA)

(1) I am training the model after exchanging the tracking datasets with the trajectory datasets.

(3) I am still making a GUI to compare multiple Super Mario RL agents.

週報(SUN YUYA)

(1) I am training the model after exchanging the tracking datasets with the trajectory datasets.

(2) I am reading the paper about dense optical flow, trying to find a simple method to compute background motion.

Paper Link: https://arxiv.org/pdf/2305.12998v2

(3) I am still making a GUI to compare multiple Super Mario RL agents.

週報(SUN YUYA)

(1) To help the trackers identify similar objects, I am still modifying the tracking datasets as the trajectory datasets before training the model.

(2) Try to find a simple method to compute camera motion.

(3) I am making a GUI to compare multiple Super Mario.

週報(SUN YUYA)

(1) To help the trackers identify similar objects, I am still modifying the tracking datasets as the trajectory datasets before training the model.

(2) Try to find a simple method to compute camera motion.

(3) I am making a GUI to compare multiple Super Mario.

週報(SUN YUYA)

(1) I am still studying how to apply trajectory prediction to long-term object tracking.

(2) About the the display of achievements in August, I am finding some of my previous projects, involving object tracking, deep reinforcement learning, large language models, and optical character recognition.

週報(SUN YUYA)

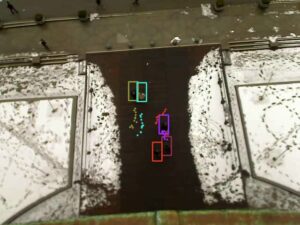

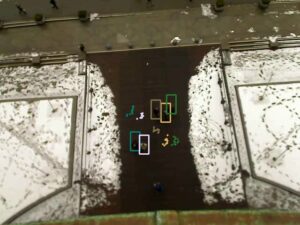

This week, I continued to conduct experiments on trajectory prediction.

I conducted visualization research on the code for the paper “Social-STGCNN: A Social Spatio-Temporal Graph Convolutional Neural

Network for Human Trajectory Prediction”.

The initial predicted trajectory is very normal.

But in the later stage, the predicted trajectory will lag behind the actual position of pedestrians.

And the predicted trajectory always appears in the middle of the image, which is also a problem.

I will find the reason to solve the problems.

週報(SUN YUYA)

(1)Recently, I have been reading papers on trajectory prediction, conducting experiments, and trying to understand the code.