This week I continued running experiments on long-term tracking. Alongside the experiments, I kept reading papers on long-term object tracking.

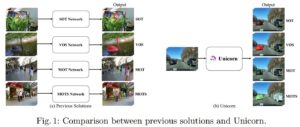

Among these papers, two trackers are the most practical: Unicorn and MixFormerV2. Unicorn is a tracker with global detection, so it can locate the target object anywhere in the whole frame. MixFormerV2 is a fast and accurate tracker that can serve as the local tracker. Combining the advantages of the two should give an excellent long-term tracker.
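To make the combination concrete, here is a minimal sketch of how the two trackers could be switched frame by frame. It assumes a local tracker and a global detector that each return a box and a confidence score. `track_frame`, `local_tracker`, `global_detector`, and `conf_threshold` are hypothetical names for illustration, not the actual Unicorn or MixFormerV2 APIs.

```python
from typing import Callable, Optional, Tuple

Box = Tuple[float, float, float, float]  # (x, y, w, h)


def track_frame(
    frame,
    last_box: Optional[Box],
    local_tracker: Callable[[object, Box], Tuple[Box, float]],
    global_detector: Callable[[object], Tuple[Optional[Box], float]],
    conf_threshold: float = 0.5,
) -> Optional[Box]:
    """Return the target box for one frame, or None if the target is lost."""
    # Fast path: search only around the previous position.
    if last_box is not None:
        box, score = local_tracker(frame, last_box)
        if score >= conf_threshold:
            return box
    # Slow path: the local tracker is unsure (or has no previous box),
    # so fall back to searching the whole frame.
    box, score = global_detector(frame)
    if box is not None and score >= conf_threshold:
        return box
    return None
```

With this split, the slow global detector only runs on frames where the fast local tracker reports low confidence, so the average speed should stay close to that of the local tracker as long as the target is rarely lost.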

The evaluation results of Unicorn and MixFormerV2 on the open benchmarks (LaSOT, TrackingNet, and GOT-10k) are as follows:

Unicorn: 10 fps

MixFormerV2: 100 fps

| Benchmark | Tracker | AUC | OP50 | OP75 | Precision | Norm Precision |
| --- | --- | --- | --- | --- | --- | --- |
| TrackingNet | Unicorn | 95.24 | 100.00 | 100.00 | 100.00 | 100.00 |
| GOT-10k | Unicorn | 82.17 | 92.17 | 82.61 | 80.01 | 91.05 |
| LaSOT | Unicorn | 67.49 | 77.64 | 64.81 | 73.00 | 75.52 |
| LaSOT | MixFormerDeit-small | 60.75 | 71.73 | 53.66 | 60.91 | 68.98 |
| LaSOT | MixFormerDeit-big | 69.95 | 81.65 | 68.83 | 75.11 | 79.69 |
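For reference, the AUC, OP50, and OP75 values above can be computed from per-frame IoU values roughly as follows. This is a sketch under the common convention that AUC is the mean success rate over 21 overlap thresholds from 0 to 1, and that OP50 and OP75 are the success rates at thresholds 0.5 and 0.75. The function names are my own, and the official toolkits may differ in details.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x, y, w, h)."""
    ax2, ay2 = a[0] + a[2], a[1] + a[3]
    bx2, by2 = b[0] + b[2], b[1] + b[3]
    inter_w = max(0.0, min(ax2, bx2) - max(a[0], b[0]))
    inter_h = max(0.0, min(ay2, by2) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = a[2] * a[3] + b[2] * b[3] - inter
    return inter / union if union > 0 else 0.0


def success_metrics(ious, num_thresholds=21):
    """Return (AUC, OP50, OP75) in percent for a list of per-frame IoUs."""
    n = len(ious)
    thresholds = [i / (num_thresholds - 1) for i in range(num_thresholds)]
    # Success curve: fraction of frames whose IoU exceeds each threshold.
    curve = [sum(v > t for v in ious) / n for t in thresholds]
    auc = 100.0 * sum(curve) / len(curve)
    op50 = 100.0 * sum(v > 0.5 for v in ious) / n
    op75 = 100.0 * sum(v > 0.75 for v in ious) / n
    return auc, op50, op75
```

Because the comparison is strict, even a perfect prediction fails at the threshold 1.0, so under this convention the AUC tops out at 20/21 of 100.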

The new papers are as follows:

I have changed my mind: I should read more new papers instead of rushing into experiments. My analysis of each paper is as follows:

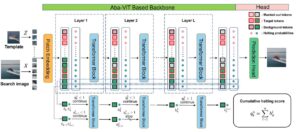

1. Adaptive and Background-Aware Vision Transformer for Real-Time UAV Tracking

The paper’s purpose:

To solve the problem that traditional CNNs are too slow.

Contributions:

(1) The paper proposes a framework in which feature learning and template-search coupling are integrated into an efficient one-stream ViT, avoiding an extra heavy relation modeling module.

(2) The proposed Aba-ViT exploits an adaptive and background-aware token computation method to reduce inference time.

(3) This approach adaptively discards tokens based on learned halting probabilities, which a priori are higher for background tokens than for target ones.

(4) Very fast: 180 fps.
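The halting mechanism in (3) can be sketched as below. This is a simplified illustration in the spirit of adaptive computation time, assuming each token accumulates its per-layer halting probability and is dropped once the sum reaches `1 - eps`. `active_tokens_per_layer` and `eps` are hypothetical names, and the paper's exact formulation may differ.

```python
def active_tokens_per_layer(halting_probs, eps=0.01):
    """halting_probs[l][k] is the halting probability of token k at layer l.

    Returns, for each layer, the sorted indices of tokens still processed.
    """
    num_tokens = len(halting_probs[0])
    cumulative = [0.0] * num_tokens
    active = set(range(num_tokens))
    schedule = []
    for layer_probs in halting_probs:
        schedule.append(sorted(active))
        for k in list(active):
            cumulative[k] += layer_probs[k]
            # A token whose accumulated score reaches 1 - eps is discarded
            # and costs nothing in the following layers.
            if cumulative[k] >= 1.0 - eps:
                active.discard(k)
    return schedule


# Token 0 behaves like background (high halting probability),
# token 1 like the target (low halting probability).
print(active_tokens_per_layer([[0.9, 0.1], [0.9, 0.1], [0.9, 0.1]]))
```

Since background tokens get higher halting probabilities, they leave the computation earlier, which is where the speed-up comes from.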

Personal Evaluation:

Very fast, and the paper provides code. We could use it as the main tracker.

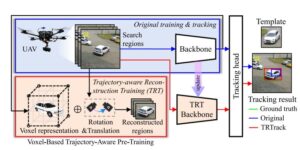

2. Boosting UAV Tracking With Voxel-Based Trajectory-Aware Pre-Training

The paper’s purpose:

(1) To solve the problem that Siamese trackers get trapped when facing multiple views of the object in consecutive frames.

(2) A general image-level pre-trained backbone can overfit to holistic representations, causing misalignment when learning object-level properties in UAV tracking.

Contributions:

(1) Fully exploits the stereoscopic representation for UAV tracking. Specifically, a novel pre-training paradigm is proposed.

(2) Through trajectory-aware reconstruction training (TRT), the backbone’s ability to extract stereoscopic structure features is strengthened without any increase in parameters.

Personal Evaluation:

No code. The paper is related to 3D tracking.

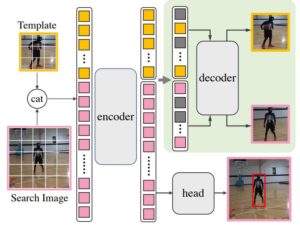

3. Compact Transformer Tracker with Correlative Masked Modeling

The paper’s purpose:

(1) To show that the traditional self-attention structure is sufficient for information aggregation and that structural adaptation is unnecessary.

Contributions:

(1) The paper attaches a lightweight correlative masked decoder which reconstructs the original template and search image from the corresponding masked tokens.

(2) The structure is very simple.

Personal Evaluation:

Code released. The benchmark results are very strong. Nice paper, but the analysis of the network is beyond my ability.

4. Continuity-Aware Latent Interframe Information Mining for Reliable UAV Tracking

The paper’s purpose:

(1) Existing trackers mainly focus on explicit information to improve tracking performance, ignoring potential inter-frame connections.

Contributions:

(1) A network that generates a highly effective latent frame between two adjacent frames.

(2) Fully explores continuity-aware spatial-temporal information.

Personal Evaluation:

Code released. The ideas are very innovative.

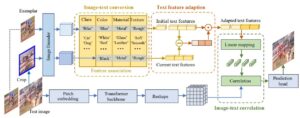

5. CiteTracker: Correlating Image and Text for Visual Tracking

The paper’s purpose:

(1) To track targets with drastic variations.

Contributions:

(1) Develops a text generation module that converts the target image patch into a descriptive text containing its class and attribute information, providing a comprehensive reference point for the target.

(2) A dynamic description module is designed to adapt to target variations for more effective target representation.

Personal Evaluation:

Code partially released. The ideas are very innovative, but I don’t know whether it works.

6. CoTracker: It is Better to Track Together

The paper’s purpose:

(1) Tracking points individually ignores the strong correlation that can exist between the points, for instance, because they belong to the same physical object, potentially harming performance.

Contributions:

(1) In this paper, we thus propose CoTracker, an architecture that jointly tracks multiple points throughout an entire video.

(2) It is based on a transformer network that models the correlation of different points in time via specialised attention layers. The transformer iteratively updates an estimate of several trajectories.

Personal Evaluation:

Code released. Very important. The ideas are very innovative. The paper is related to optical flow and trajectories. We may use it to estimate the motion of the environment.

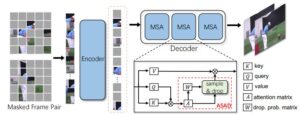

7. DropMAE: Masked Auto-encoders with Spatial-Attention Dropout for Tracking Tasks

The paper’s purpose:

(1) MAE (masked autoencoder) heavily relies on spatial cues while ignoring temporal relations for frame reconstruction.

Contributions:

(1) DropMAE adaptively performs spatial-attention dropout in the frame reconstruction to facilitate temporal correspondence learning in videos.

(2) Improving the pre-training process.

Personal Evaluation:

Code released. The ideas are very innovative, but I could not understand the paper.

8. Efficient Training for Visual Tracking with Deformable Transformer

The paper’s purpose:

(1) Current trackers are resource-intensive, requiring prolonged GPU training hours and incurring high GFLOPs during inference. The paper aims to improve the inefficient training methods and the convolution-based target heads.

Contributions:

(1) Our framework utilizes an efficient encoder-decoder structure where the deformable transformer decoder, acting as a target head, achieves higher sparsity than traditional convolution heads, resulting in decreased GFLOPs.

(2) For training, we introduce a novel one-to-many label assignment and an auxiliary denoising technique, significantly accelerating the model’s convergence.

Personal Evaluation:

No code.

9. Efficient Visual Tracking with Exemplar Transformers

The paper’s purpose:

(1) In the pursuit of increased tracking performance, runtime is often hindered.

(2) Furthermore, efficient tracking architectures have received surprisingly little attention.

Contributions:

(1) E.T.Track, our visual tracker that incorporates Exemplar Transformer modules, runs at 47 FPS on a CPU.

(2) In this paper, we introduce the Exemplar Transformer, a transformer module utilizing a single instance-level attention layer for real-time visual object tracking.

Personal Evaluation:

Code released. Very fast, and the architecture is very simple, but the benchmark results do not seem very good.

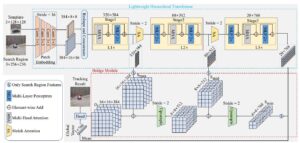

10. Exploiting Image-Related Inductive Biases in Single-Branch Visual Tracking

The paper’s purpose:

(1) Throw the backbone away.

Contributions:

(1) Bridges the gap between single-branch networks and discriminative models.

(2) Bolsters tracking robustness in the presence of distractors via bidirectional cycle tracking verification.

Personal Evaluation:

No code. The idea is not new.

11. Exploring Lightweight Hierarchical Vision Transformers for Efficient Visual Tracking

The paper’s purpose:

(1) Existing trackers are hampered by low speed, limiting their applicability on devices with limited computational power.

Contributions:

(1) The central idea of HiT is the Bridge Module, which bridges the gap between modern lightweight transformers and the tracking framework.

(2) We also propose a novel dual-image position encoding technique that simultaneously encodes the position information of both the search region and template images.

Personal Evaluation:

Code partially released. The idea is not new, and 61 fps is not sufficient.

11. Generalized Relation Modeling for Transformer Tracking

The paper’s purpose:

(1) Existing one-stream trackers always let the template interact with all parts inside the search region throughout all the encoder layers.

Contributions:

(1) Enables more flexible relation modeling by selecting appropriate search tokens to interact with template tokens.

(2) An attention masking strategy and the Gumbel-Softmax technique are introduced to facilitate the parallel computation and end-to-end learning of the token division module.
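As a reminder of what the Gumbel-Softmax technique does, here is a minimal sketch of sampling a relaxed one-hot choice from categorical logits, for example deciding which group a search token belongs to. It is plain Python for illustration only. The function names, the temperature `tau`, and the example logits are assumptions of mine, not the paper's code, and a real implementation would run inside an autograd framework so that gradients flow through the soft sample.

```python
import math
import random


def gumbel_softmax(logits, tau=1.0, rng=random):
    """Draw one relaxed (soft) one-hot sample from categorical logits."""
    noisy = []
    for logit in logits:
        u = max(rng.random(), 1e-12)       # guard against log(0)
        gumbel = -math.log(-math.log(u))   # Gumbel(0, 1) noise
        noisy.append((logit + gumbel) / tau)
    # Numerically stable softmax over the perturbed logits.
    m = max(noisy)
    exps = [math.exp(v - m) for v in noisy]
    total = sum(exps)
    return [e / total for e in exps]


def hard_assign(soft):
    """Turn the soft sample into a discrete one-hot decision."""
    best = soft.index(max(soft))
    return [1 if i == best else 0 for i in range(len(soft))]


sample = gumbel_softmax([1.0, 2.0, 0.5], tau=0.5, rng=random.Random(0))
print(sample, hard_assign(sample))
```

A lower `tau` pushes the soft sample closer to one-hot, while a higher `tau` makes it smoother and easier to optimize.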

Personal Evaluation:

Code released. The innovation concerns the network structure.

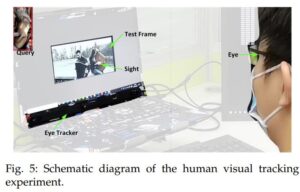

12. Global Instance Tracking: Locating Target More Like Humans

The paper’s purpose:

(1) The massive gap indicates that research only measures tracking performance rather than intelligence.

(2) Occlusion and fast motion.

Contributions:

(1) In this article, we first propose the global instance tracking (GIT) task, which is supposed to search an arbitrary user-specified instance in a video without any assumptions about camera or motion consistency, to model the human visual tracking ability.

(2) Whereafter, we construct a high-quality and large-scale benchmark VideoCube to create a challenging environment.

(3) Finally, we design a scientific evaluation procedure using human capabilities as the baseline to judge tracking intelligence.

(4) Additionally, we provide an online platform with toolkit and an updated leaderboard.

Personal Evaluation:

A new benchmark dataset. It may be very important.

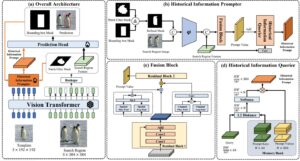

13. Learning Historical Status Prompt for Accurate and Robust Visual Tracking

The paper’s purpose:

(1) Existing trackers struggle to make predictions when the target appearance changes, because only limited historical information is introduced by roughly cropping the current search region based on the predicted result of the previous frame.

(2) The incapacity to integrate abundant and effective historical information.

Contributions:

(1) HIP is a plug-and-play module that makes full use of search region features to introduce historical appearance information.

Personal Evaluation:

No code. How is the mask produced during tracking?

14. Leveraging the Power of Data Augmentation for Transformer-based Tracking

The paper’s purpose:

(1) Previous works focus on designing effective architectures suited for tracking, but ignore that data augmentation is equally crucial for training a well-performing model.

Contributions:

(1) We optimize existing random cropping via a dynamic search radius mechanism and simulation for boundary samples.

(2) We propose a token-level feature mixing augmentation strategy, which strengthens the model against challenges like background interference.

Personal Evaluation:

No code. It’s difficult to add a new tracking toolkit.

15. Lightweight Full-Convolutional Siamese Tracker

The paper’s purpose:

(1) Current tracking models are too big.

Contributions:

(1) LightFC employs a novel efficient cross-correlation module (ECM) and a novel efficient rep-center head (ERH) to enhance the nonlinear expressiveness of the convolutional tracking pipeline.

(2) Additionally, it references successful factors of current lightweight trackers and introduces skip-connections and reuse of search area features.

Personal Evaluation:

Code released. Another fast tracker. Worth reading.

16. LiteTrack: Layer Pruning with Asynchronous Feature Extraction for Lightweight and Efficient Visual Tracking

The paper’s purpose:

(1) Too big and too slow.

Contributions:

The main innovations of LiteTrack encompass:

(1) Asynchronous feature extraction and interaction between the template and search region for better feature fusion and less redundant computation.

(2) Pruning encoder layers from a heavy tracker to refine the balance between performance and speed.

Personal Evaluation:

Code released. Another fast tracker. Worth reading.

17. MixFormerV2: Efficient Fully Transformer Tracking

The paper’s purpose:

(1) Too slow.

Contributions:

(1) Our key design is to introduce four special prediction tokens and concatenate them with the tokens from target template and search areas.

(2) Then, we apply the unified transformer backbone on this mixed token sequence.

(3) Based on them, we can easily predict the tracking box and estimate its confidence score through simple MLP heads.

Personal Evaluation:

Code released. Another fast tracker. Worth reading.

18. Mobile Vision Transformer-based Visual Object Tracking

The paper’s purpose:

(1) Too slow and too big.

Contributions:

(1) We propose a lightweight, accurate, and fast tracking algorithm using Mobile Vision Transformers (MobileViT) as the backbone for the first time.

(2) We also present a novel approach of fusing the template and search region representations in the MobileViT backbone, thereby generating superior feature encoding for target localization.

Personal Evaluation:

Code released. Another fast tracker. Worth reading.

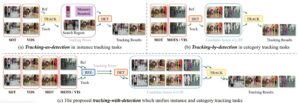

19. OmniTracker: Unifying Object Tracking by Tracking-with-Detection

The paper’s purpose:

(1) Combining SOT and MOT.

Contributions:

(1) We propose a novel tracking-with-detection paradigm, where tracking supplements appearance priors for detection and detection provides tracking with candidate bounding boxes for association.

(2) Equipped with such a design, a unified tracking model, OmniTracker, is further presented to resolve all the tracking tasks with a fully shared network architecture, model weights, and inference pipeline.

Personal Evaluation:

No code. Useless.

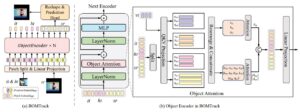

20. Robust Object Modeling for Visual Tracking

The paper’s purpose:

(1) Separate template learning lacks communication between the template and search regions, which brings difficulty in extracting discriminative target-oriented features.

(2) On the other hand, interactive template learning produces hybrid template features, which may introduce potential distractors to the template via the cluttered search regions.

Contributions:

(1) To enjoy the merits of both methods, we propose a robust object modeling framework for visual tracking (ROMTrack), which simultaneously models the inherent template and the hybrid template features.

(2) To further enhance robustness, we present novel variation tokens to depict the ever-changing appearance of target objects.

Personal Evaluation:

Code released. There is too much filler in this paper. Normal paper.

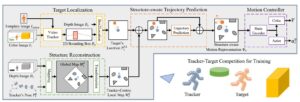

21. RSPT: Reconstruct Surroundings and Predict Trajectories for Generalizable Active Object Tracking

The paper’s purpose:

(1) However, building a generalizable active tracker that works robustly across different scenarios remains a challenge, especially in unstructured environments with cluttered obstacles and diverse layouts.

Contributions:

(1) To address this challenge, we present RSPT, a framework that forms a structure-aware motion representation by Reconstructing the Surroundings and Predicting the target Trajectory.

Personal Evaluation:

No code. I don’t know how to make use of this paper.

22. Segment and Track Anything

The paper’s purpose:

(1) To precisely and effectively segment and track any object in a video.

Contributions:

(1) SAM-Track employs multimodal interaction methods that enable users to select multiple objects in videos for tracking, corresponding to their specific requirements.

(2) As a result, SAM-Track can be used across an array of fields, ranging from drone technology, autonomous driving, medical imaging, augmented reality, to biological analysis.

Personal Evaluation:

Code released. I don’t need a tracker with this many functions.

23. Separable Self and Mixed Attention Transformers for Efficient Object Tracking

The paper’s purpose:

(1) However, the transformer-based models are underutilized for Siamese lightweight tracking due to the computational complexity of their attention blocks.

Contributions:

(1) This paper proposes an efficient self and mixed attention transformer-based architecture for lightweight tracking.

(2) Our prediction head performs global contextual modeling of the encoded features by leveraging efficient self-attention blocks for robust target state estimation.

Personal Evaluation:

Code released. Normal paper.

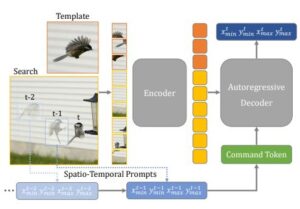

24. SeqTrack: Sequence to Sequence Learning for Visual Object Tracking

The paper’s purpose:

(1) It casts visual tracking as a sequence generation problem, which predicts object bounding boxes in an autoregressive fashion.

Contributions:

(1) The encoder extracts visual features with a bidirectional transformer.

(2) The decoder generates a sequence of bounding box values autoregressively with a causal transformer.
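The sequence formulation can be illustrated with a small sketch: box coordinates are quantized into discrete tokens, and a decoder produces them one at a time, each conditioned on the tokens generated so far. This is my own simplified illustration. `n_bins`, the normalized `(x, y, w, h)` format, and the `next_token` callable (standing in for the causal transformer) are assumptions, and SeqTrack's actual vocabulary and box format may differ.

```python
def box_to_tokens(box, n_bins=1000):
    """Quantize normalized box values in [0, 1] into integer tokens."""
    return [min(n_bins - 1, max(0, int(v * n_bins))) for v in box]


def tokens_to_box(tokens, n_bins=1000):
    """Map tokens back to normalized values (the centre of each bin)."""
    return [(t + 0.5) / n_bins for t in tokens]


def generate_box_tokens(next_token, start_token, length=4):
    """Autoregressive decoding: each new token depends on all previous ones."""
    seq = [start_token]
    for _ in range(length):
        seq.append(next_token(seq))
    return seq[1:]  # drop the start token


tokens = box_to_tokens([0.0, 0.5, 0.25, 1.0])
print(tokens, tokens_to_box(tokens))
```

The quantization error is at most one bin width, so a larger `n_bins` gives finer localization at the cost of a larger vocabulary.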

Personal Evaluation:

Code released. Worth reading.

25. SiamTHN: Siamese Target Highlight Network for Visual Tracking

The paper’s purpose:

(1) The majority of Siamese network based trackers now in use treat each channel in the feature maps generated by the backbone network equally, making the similarity response map sensitive to background influence and hence challenging to focus on the target region.

(2) Additionally, there are no structural links between the classification and regression branches in these trackers, and the two branches are optimized separately during training.

(3) Therefore, there is a misalignment between the classification and regression branches, which results in less accurate tracking results.

Contributions:

(1) In this paper, a Target Highlight Module is proposed to help the generated similarity response maps to be more focused on the target region.

(2) To reduce the misalignment and produce more precise tracking results, we propose a corrective loss to train the model.

Personal Evaluation:

No code. Normal paper.

26. SOTVerse: A User-defined Task Space of Single Object Tracking

The paper’s purpose:

(1) Isolated experimental environments prevent existing datasets from being exploited comprehensively, while limited evaluation methods neglect challenging factors in the evaluation process.

Contributions:

(1) We first propose a 3E Paradigm to describe tasks by three components (i.e., environment, evaluation, and executor).

(2) Then, we summarize task characteristics, clarify the organization standards, and construct SOTVerse with 12.56 million frames. Specifically, SOTVerse automatically labels challenging factors per frame, allowing users to generate user-defined spaces efficiently via construction rules.

(3) Besides, SOTVerse provides two mechanisms with new indicators and successfully evaluates trackers under various subtasks.

Personal Evaluation:

Code released. Interesting idea. Worth reading.

27. Towards Efficient Training with Negative Samples in Visual Tracking

The paper’s purpose:

(1) Current state-of-the-art (SOTA) methods in visual object tracking often require extensive computational resources and vast amounts of training data, leading to a risk of overfitting.

Contributions:

(1) To handle the negative samples effectively, we adopt a distribution-based head, which models the bounding box as a distribution of distances to express uncertainty about the target’s location in the presence of negative samples, offering an efficient way to manage the mixed sample training.

(2) Our approach introduces a target-indicating token, encapsulating the target’s precise location within the template image.
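To make the idea of a distribution-based head more concrete, here is a minimal sketch in which the distance from a reference point to one box side is predicted as a discrete distribution over bins. The expected value gives the distance and the variance gives a measure of uncertainty. This follows the general style of distribution-based box regression and is only my assumption of how such a head can be read out. The function names, the number of bins, and `max_distance` are hypothetical.

```python
import math


def softmax(xs):
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]


def distance_from_distribution(logits, max_distance=1.0):
    """Return (expected distance, variance) from per-bin logits."""
    probs = softmax(logits)
    n = len(probs)
    # Bin centres spread evenly over [0, max_distance].
    bins = [max_distance * i / (n - 1) for i in range(n)]
    mean = sum(p * b for p, b in zip(probs, bins))
    var = sum(p * (b - mean) ** 2 for p, b in zip(probs, bins))
    return mean, var


# A flat distribution (no idea where the side is) has high variance,
# while a peaked one has almost none.
print(distance_from_distribution([0.0, 0.0, 0.0]))
print(distance_from_distribution([0.0, 50.0, 0.0]))
```

For a negative sample, where the target is absent from the search region, one would expect the predicted distribution to be flat, which shows up here as a large variance.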

Personal Evaluation:

No code. Normal paper.

28. Towards Grand Unification of Object Tracking

The paper’s purpose:

(1) We present a unified method, termed Unicorn, that can simultaneously solve four tracking problems (SOT, MOT, VOS, MOTS) with a single network using the same model parameters.

Contributions:

(1) Unicorn provides a unified solution, adopting the same input, backbone, embedding, and head across all tracking tasks.

Personal Evaluation:

Code released. Nice paper. Worth reading.

29. Tracking through Containers and Occluders in the Wild

The paper’s purpose:

(1) Tracking objects with persistence in cluttered and dynamic environments remains a difficult challenge for computer vision systems.

Contributions:

(1) We set up a task where the goal is to, given a video sequence, segment both the projected extent of the target object, as well as the surrounding container or occluder whenever one exists.

(2) To study this task, we create a mixture of synthetic and annotated real datasets to support both supervised learning and structured evaluation of model performance under various forms of task variation, such as moving or nested containment.

Personal Evaluation:

Code released. Nice paper. Worth reading.

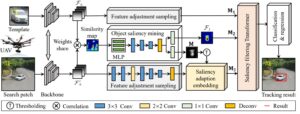

30. SGDViT: Saliency-Guided Dynamic Vision Transformer for UAV Tracking

The paper’s purpose:

(1) The dynamic changes in flight maneuver and viewpoint encountered in UAV tracking pose significant difficulties, e.g., aspect ratio change and scale variation.

(2) The conventional cross-correlation operation, while commonly used, has limitations in effectively capturing perceptual similarity and incorporates extraneous background information.

Contributions:

(1) The proposed method designs a new task-specific object saliency mining network to refine the cross-correlation operation and effectively discriminate foreground and background information.

(2) Additionally, a saliency adaptation embedding operation dynamically generates tokens based on initial saliency, thereby reducing the computational complexity of the Transformer architecture.

(3) Finally, a lightweight saliency filtering Transformer further refines saliency information and increases the focus on appearance information.

Personal Evaluation:

Code released. Normal paper.

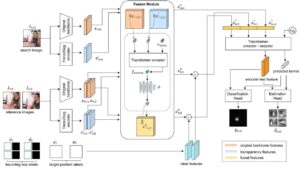

31. Transparent Object Tracking with Enhanced Fusion Module

The paper’s purpose:

(1) Accurate tracking of transparent objects, such as glasses, plays a critical role in many robotic tasks such as robot-assisted living.

(2) Due to the adaptive and often reflective texture of such objects, traditional tracking algorithms that rely on general-purpose learned features suffer from reduced performance.

(3) For example, many of the current days’ transformer-based trackers are fully pre-trained and are sensitive to any latent space perturbations.

Contributions:

(1) Our proposed fusion module, composed of a transformer encoder and an MLP module, leverages key query-based transformations to embed the transparency information into the tracking pipeline.

(2) We also present a new two-step training strategy for our fusion module to effectively merge transparency features.

Personal Evaluation:

Code partially released. Normal paper.

32. Video-ChatGPT: Towards Detailed Video Understanding via Large Vision and Language Models

The paper’s purpose:

(1) Despite remarkable progress in image and video recognition via representation learning, current research still focuses on designing specialized networks for singular, homogeneous, or simple combination of tasks.

Contributions:

(1) We design a Curriculum training, Pseudo-labeling, and Fine-tuning (CPF) scheme to successfully train VTDNet on all tasks and mitigate performance loss.

Personal Evaluation:

Code partially released. Normal paper.

33. Visual Prompt Multi-Modal Tracking

The paper’s purpose:

(1) Trajectory prediction.

Contributions:

(1) ARTrack tackles tracking as a coordinate sequence interpretation task that estimates object trajectories progressively, where the current estimate is induced by previous states and in turn affects subsequences.

(2) This time-autoregressive approach models the sequential evolution of trajectories to keep tracing the object across frames, making it superior to existing template matching based trackers that only consider the per-frame localization accuracy.

Personal Evaluation:

Code released. Worth reading.

34. VideoTrack: Learning to Track Objects via Video Transformer

The paper’s purpose:

(1) Existing Siamese tracking methods, which are built on pair-wise matching between two single frames, heavily rely on additional sophisticated mechanism to exploit temporal information among successive video frames, hindering them from efficiency and industrial deployments.

Contributions:

(1) Specifically, we adapt the standard video transformer architecture to visual tracking by enabling spatiotemporal feature learning directly from frame-level patch sequences.

(2) To better adapt to the tracking task, we carefully blend the spatiotemporal information in the video clips through sequential multi-branch triplet blocks, which formulates a video transformer backbone.

(3) Our experimental study compares different model variants, such as tokenization strategies, hierarchical structures, and video attention schemes.

(4) Then, we propose a disentangled dual-template mechanism that decouples static and dynamic appearance clues over time, and reduces temporal redundancy in video frames.

Personal Evaluation:

No code. I could not understand the paper.

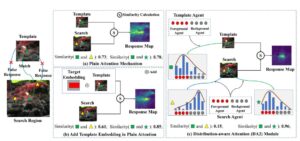

35. Foreground-Background Distribution Modeling Transformer for Visual Object Tracking

The paper’s purpose:

(1) However, the feature learning of these Transformer-based trackers is easily disturbed by complex backgrounds.

Contributions:

(1) To address the above limitations, we propose a novel foreground-background distribution modeling transformer for visual object tracking (F-BDMTrack), including a fore-background agent learning (FBAL) module and a distribution-aware attention (DA2) module in a unified transformer architecture.

(2) The proposed F-BDMTrack enjoys several merits. First, the proposed FBAL module can effectively mine fore-background information with designed fore-background agents.

(3) Second, the DA2 module can suppress the incorrect interaction between foreground and background by modeling fore-background distribution similarities.

(4) Finally, F-BDMTrack can extract discriminative features under ever-changing tracking scenarios for more accurate target state estimation.

Personal Evaluation:

No code. I could not understand the paper.

36. Representation Learning for Visual Object Tracking by Masked Appearance Transfer

The paper’s purpose:

(1) Few works study tracking-specific representation learning methods. Most trackers directly use ImageNet pre-trained representations.

Contributions:

(1) For the template, the decoder is made to reconstruct the target appearance within the search region.

(2) We randomly mask out the inputs, thereby making the learned representations more discriminative.

Personal Evaluation:

Code released. The code is complex.

37. ZoomTrack: Target-aware Non-uniform Resizing for Efficient Visual Tracking

The paper’s purpose:

In this paper, we demonstrate that it is possible to narrow or even close this gap while achieving high tracking speed based on the smaller input size.

Contributions:

(1) To this end, we non-uniformly resize the cropped image to have a smaller input size while the resolution of the area where the target is more likely to appear is higher and vice versa.

(2) This enables us to solve the dilemma of attending to a larger visual field while retaining more raw information for the target despite a smaller input size.

(3) Our formulation for the non-uniform resizing can be efficiently solved through quadratic programming (QP) and naturally integrated into most of the crop-based local trackers.
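The effect of non-uniform resizing can be illustrated along a single axis with a much simpler scheme than the paper's QP formulation: a piecewise-linear mapping that samples the target span more densely than the rest of the crop. This is my own simplification for intuition only. `nonuniform_axis_map`, `zoom`, and the example numbers are hypothetical, and ZoomTrack itself solves for the sampling grid differently.

```python
def nonuniform_axis_map(src_len, tgt_start, tgt_end, out_len, zoom=2.0):
    """Return the source coordinate sampled by each output pixel.

    The span [tgt_start, tgt_end) is sampled `zoom` times more densely
    than the regions to its left and right.
    """
    a, b = tgt_start, tgt_end
    # Total "weighted length": the target span counts zoom times.
    total = a + zoom * (b - a) + (src_len - b)
    coords = []
    for u in range(out_len):
        w = (u + 0.5) / out_len * total  # position in weighted space
        if w < a:                        # left of the target
            x = w
        elif w < a + zoom * (b - a):     # inside the target span
            x = a + (w - a) / zoom
        else:                            # right of the target
            x = b + (w - a - zoom * (b - a))
        coords.append(x)
    return coords


coords = nonuniform_axis_map(src_len=100, tgt_start=40, tgt_end=60, out_len=60)
inside = sum(40 <= x < 60 for x in coords)
print(inside, "of", len(coords), "output pixels fall on the target span")
```

In this example the target covers 20% of the source axis but receives a third of the output pixels, whereas uniform resizing to the same output size would give it only 12 of the 60 pixels.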

Personal Evaluation:

Code partially released. Normal paper.