This week I have been reading papers on long-term object tracking and downloading the related tracking datasets. Compared with ordinary (short-term) tracking datasets, long-term tracking datasets have fewer download sources and are harder to obtain, so everything has to be set up from scratch.

I want to find an accurate method to judge whether the tracked target is absent. Most papers use the confidence score to judge how well tracking is going, but I don't think it is reliable. A trajectory predictor could be used to correct the tracker, but only one paper adopts this approach, using a distribution to guess the target's location.
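To make my own idea concrete (this is not any paper's method), here is a minimal sketch: a constant-velocity predictor extrapolates the target centre from the last two centres, and an unnormalised Gaussian around that prediction down-weights confident detections that jump far away from the trajectory. The function names, `sigma` and the 0.3 threshold are all my assumptions.

```python
import math

# Assumption: centres are (x, y) pixel tuples; sigma and threshold are hand-picked.

def predict_center(history):
    """Constant-velocity guess of the next (x, y) centre from past centres."""
    if len(history) < 2:
        return history[-1]
    (x1, y1), (x2, y2) = history[-2], history[-1]
    return (2 * x2 - x1, 2 * y2 - y1)

def motion_likelihood(center, predicted, sigma=20.0):
    """Unnormalised isotropic Gaussian: 1.0 at the prediction, decaying with distance."""
    dx, dy = center[0] - predicted[0], center[1] - predicted[1]
    return math.exp(-(dx * dx + dy * dy) / (2 * sigma * sigma))

def is_absent(confidence, center, history, threshold=0.3, sigma=20.0):
    """Declare the target absent when confidence times the motion prior is low."""
    predicted = predict_center(history)
    score = confidence * motion_likelihood(center, predicted, sigma)
    return score < threshold

history = [(100.0, 100.0), (110.0, 100.0)]
print(is_absent(0.9, (121.0, 101.0), history))  # near the predicted (120, 100)
print(is_absent(0.9, (300.0, 250.0), history))  # confident, but far off the trajectory
```

The second call shows the point of the prior: a high confidence alone does not keep the target "present" if the box is implausible given the path history.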

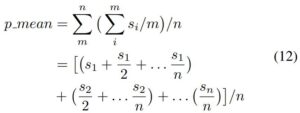

The metrics of long-term tracking are F1-score, precision and recall, which differ from those of single object tracking. However, these papers do not test on the same benchmark, which makes it difficult for me to compare the trackers. (The most common benchmark is VOT2020LT.)
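As far as I understand the VOT long-term protocol, precision is the average IoU over frames where the tracker reports the target (confidence at least a threshold tau), recall is the average IoU over frames where the target is actually visible, and the reported F-score is the maximum of 2 * Pr * Re / (Pr + Re) over tau. Below is a minimal sketch under those assumptions, with boxes as (x, y, w, h) tuples and `None` marking an absent target or a missing prediction; for numbers to report, the official VOT toolkit should be used instead.

```python
# Assumption: my reading of the VOT long-term measures, not the official toolkit code.

def iou(a, b):
    """IoU of two (x, y, w, h) boxes; 0.0 if either box is missing."""
    if a is None or b is None:
        return 0.0
    iw = max(0.0, min(a[0] + a[2], b[0] + b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[1] + a[3], b[1] + b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = a[2] * a[3] + b[2] * b[3] - inter
    return inter / union if union > 0 else 0.0

def precision_recall_f(preds, scores, gts, tau):
    """Long-term precision, recall and F at confidence threshold tau."""
    # A prediction only counts if its confidence reaches tau.
    kept = [p if (p is not None and s >= tau) else None for p, s in zip(preds, scores)]
    overlaps = [iou(p, g) for p, g in zip(kept, gts)]
    n_pred = sum(p is not None for p in kept)
    n_gt = sum(g is not None for g in gts)
    pr = sum(o for o, p in zip(overlaps, kept) if p is not None) / n_pred if n_pred else 0.0
    re = sum(o for o, g in zip(overlaps, gts) if g is not None) / n_gt if n_gt else 0.0
    f = 2 * pr * re / (pr + re) if pr + re > 0 else 0.0
    return pr, re, f

def long_term_f_score(preds, scores, gts):
    """(Pr, Re, F) at the threshold that maximises F; thresholds come from the scores."""
    taus = sorted(set(s for p, s in zip(preds, scores) if p is not None))
    return max((precision_recall_f(preds, scores, gts, t) for t in taus),
               key=lambda prf: prf[2], default=(0.0, 0.0, 0.0))

gts = [(0, 0, 10, 10), (0, 0, 10, 10), None, (0, 0, 10, 10)]
preds = [(0, 0, 10, 10), (0, 0, 10, 10), (50, 50, 10, 10), (0, 0, 10, 10)]
scores = [0.9, 0.8, 0.2, 0.7]
print(long_term_f_score(preds, scores, gts))  # (1.0, 1.0, 1.0), reached at tau = 0.7
```

In the toy sequence the low-confidence box in frame 3, where the target is absent, hurts precision at tau = 0.2 (0.75), so the best F is reached once that prediction is filtered out. This is why a tracker that reports absence well scores higher on long-term benchmarks.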

My analysis of each paper is as follows:

1. Combining complementary trackers for enhanced long-term visual object tracking

The paper’s purpose:

Combining the capabilities of baseline trackers in the context of long-term visual object tracking.

Contributions:

(1) Proposing a strategy that uses an online-learned deep verification model to perceive whether each of the two trackers is following the target object, selecting the best-performing tracker and correcting the trackers when they fail.

Personal Evaluation:

Long-term trackers usually have the ability to switch between a local tracker and a global tracker. This paper may offer a novel way to do this.

Details:

Two trackers, Stark and SuperDiMP, run in parallel.

Stark predicts more accurate bounding boxes.

SuperDiMP outputs a more reliable score for judging whether the target is lost.

The paper proposes a policy that selects the better of the two trackers in frame t.

The decision is based on the confidence scores and the verifier.

The verifier is trained offline and updated online with image patches.
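The paper's exact policy is not reproduced here. The sketch below is only my reading of the idea, with hypothetical names and thresholds: prefer Stark's box whenever the verifier accepts it, fall back to SuperDiMP when Stark is rejected but SuperDiMP is both confident and verified, and otherwise report the target as lost.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

Box = Tuple[float, float, float, float]  # (x, y, w, h)

@dataclass
class TrackerOutput:
    box: Box
    confidence: float      # the tracker's own score
    verifier_score: float  # output of the online-updated binary verifier

# Hypothetical thresholds; the paper's values and exact rule may differ.
def select_box(stark: TrackerOutput, dimp: TrackerOutput,
               verify_thr: float = 0.5, conf_thr: float = 0.3) -> Optional[Box]:
    """Pick one box for frame t; None means the target is reported as lost."""
    # Stark gives tighter boxes, so it is preferred whenever the verifier agrees.
    if stark.verifier_score >= verify_thr:
        return stark.box
    # SuperDiMP's confidence is treated as the better presence signal.
    if dimp.confidence >= conf_thr and dimp.verifier_score >= verify_thr:
        return dimp.box
    return None

stark = TrackerOutput((10, 10, 50, 50), confidence=0.9, verifier_score=0.2)
dimp = TrackerOutput((12, 11, 48, 52), confidence=0.6, verifier_score=0.7)
print(select_box(stark, dimp))  # Stark is rejected by the verifier, so SuperDiMP's box is used
```

I have not sketched the correction step; presumably the selected box would be used to reset the rejected tracker, but the details need to be checked in the paper.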

Deep evaluation: A very simple design that should be easy to reproduce. The key technique is a simple deep binary classifier, and its training data can be collected during training. However, the paper does not provide code, so I don't know whether it really works.

The flow chart is as follows:

2. Target-Aware Tracking with Long-term Context Attention

The paper’s purpose:

(1) Exploiting contextual information, unlike Siamese trackers.

(2) Coping with large appearance changes, rapid target movement, and distraction by similar objects.

Contributions:

(1) Proposing a long-term context attention (LCA) module, based on a transformer, that uses the target state from the previous frame to exclude interference from similar objects and complex backgrounds.

(2) Proposing a concise and efficient online updating approach based on classification confidence to select high-quality templates with very low computational cost.

Personal Evaluation:

An ordinary tracker with a new transformer module.

Details:

The first contribution relies on the Swin Transformer.

The template update mechanism is the key contribution. It exploits the confidence scores of consecutive frames: a plain mean over the T templates is not enough, so it also uses a threshold and a penalty.
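I have not read the code yet, so the rule below is only a hypothetical reading of "mean plus threshold and penalty": keep the confidences of the last T frames, subtract a fixed penalty for every frame below a low-confidence threshold, and update the template only when this penalised mean clears an update threshold. The class name, T, both thresholds and the penalty value are my assumptions.

```python
from collections import deque

class TemplateUpdater:
    """Hypothetical confidence-gated template update (my reading, not the paper's code)."""

    def __init__(self, window=5, low_thr=0.5, penalty=0.1, update_thr=0.6):
        self.scores = deque(maxlen=window)  # confidences of the last T frames
        self.low_thr = low_thr              # assumed "low confidence" cut-off
        self.penalty = penalty              # assumed penalty per low-confidence frame
        self.update_thr = update_thr        # assumed gate for accepting an update

    def quality(self):
        """Mean confidence over the window, penalised once per low-confidence frame."""
        if not self.scores:
            return 0.0
        mean = sum(self.scores) / len(self.scores)
        n_low = sum(s < self.low_thr for s in self.scores)
        return mean - self.penalty * n_low

    def step(self, confidence):
        """Record this frame's confidence and decide whether to update the template now."""
        self.scores.append(confidence)
        full = len(self.scores) == self.scores.maxlen
        return full and self.quality() >= self.update_thr

u = TemplateUpdater(window=3)
print([u.step(c) for c in (0.9, 0.9, 0.9, 0.2)])
```

In the example, one sharp drop to 0.2 blocks the update even though the plain mean of the window (about 0.67) would still pass, which is how I understand the motivation for adding the threshold and penalty.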

Deep evaluation:

Its contributions are simple and easy to reproduce.

The paper provides code, and I should read it.

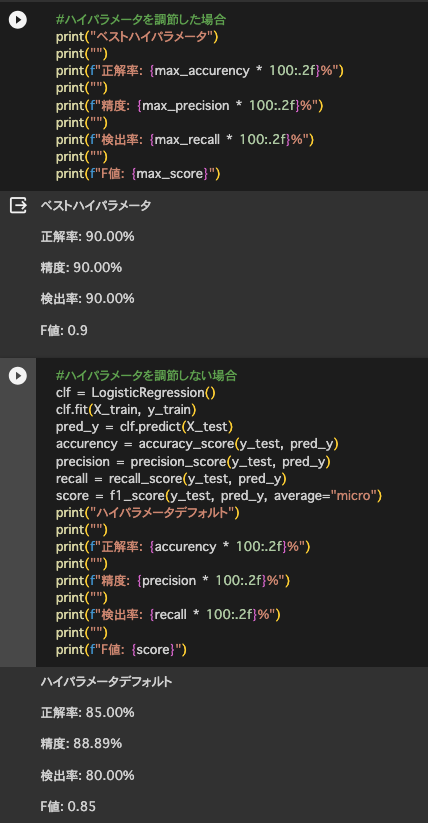

3. Multi-Template Temporal Siamese Network for Long-Term Object Tracking

The paper’s purpose:

(1) Avoiding the defects of Siamese trackers.

(2) Coping with target appearance changes and similar objects.

Contributions:

(1) Learning the path history of the target with a bag of templates.

(2) Projecting a potential future target location in the next frame.

Personal Evaluation: Competitor! Its ideas are close to mine. Worth reading.

Details:

It uses the confidence score of the RPN head to update the templates. The first mechanism uses the confidence scores as weights to compute the similarity.

The second mechanism predicts a possible bounding box, but the paper only treats it in passing, although it should be the most important part.

The second mechanism then uses this latent bounding box together with the similarity to compute a reliability score.
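To pin down what I want to look for in the code, here is a guess at how the pieces could fit together, with hypothetical names: similarity to the bag of template features is averaged with weights given by each template's stored confidence, the projected box comes from a constant-velocity extrapolation of the last two boxes, and reliability is the product of the weighted similarity and the IoU between the candidate box and the projected box. The paper may combine them differently.

```python
import math

# Assumption: features are plain vectors and boxes are (x, y, w, h); not the paper's code.

def cosine(u, v):
    """Cosine similarity of two feature vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm > 0 else 0.0

def iou(a, b):
    """IoU of two (x, y, w, h) boxes."""
    iw = max(0.0, min(a[0] + a[2], b[0] + b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[1] + a[3], b[1] + b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = a[2] * a[3] + b[2] * b[3] - inter
    return inter / union if union > 0 else 0.0

def weighted_similarity(feat, templates, weights):
    """Mean cosine similarity to a bag of templates, weighted by stored confidences."""
    total = sum(weights)
    if total <= 0:
        return 0.0
    return sum(w * cosine(feat, t) for t, w in zip(templates, weights)) / total

def project_box(prev, curr):
    """Constant-velocity projection of the box position; the size is kept from curr."""
    x, y, w, h = curr
    return (2 * x - prev[0], 2 * y - prev[1], w, h)

def reliability(feat, box, templates, weights, prev_box, curr_box):
    """Hypothetical reliability: template similarity times agreement with the projected box."""
    projected = project_box(prev_box, curr_box)
    return weighted_similarity(feat, templates, weights) * iou(box, projected)

templates, weights = [[1.0, 0.0], [0.0, 1.0]], [0.9, 0.1]
prev_box, curr_box = (0, 0, 10, 10), (5, 0, 10, 10)
print(reliability([1.0, 0.0], (10, 0, 10, 10), templates, weights, prev_box, curr_box))
print(reliability([1.0, 0.0], (20, 0, 10, 10), templates, weights, prev_box, curr_box))
```

The second candidate looks exactly like the main template but lies off the projected path, so its reliability drops to zero; this is the behaviour I would hope the real mechanism has against similar distractors.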

Deep evaluation: An ordinary paper, but it provides code, so I can read the code to find the part that predicts the bounding box.

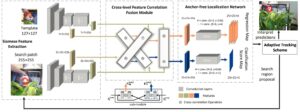

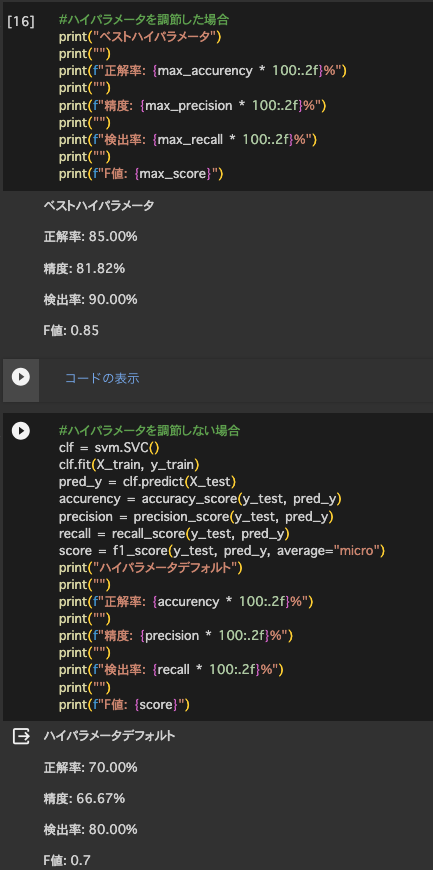

4. SiamX: An Efficient Long-term Tracker Using Cross-level Feature Correlation and Adaptive Tracking Scheme

The paper’s purpose:

(1) Improving Siamese trackers.

(2) Coping with large appearance variation, distractors, fast motion, target disappearance, and the like.

Contributions:

(1) Exploiting cross-level Siamese features to learn robust correlations between the target template and search regions.

(2) Proposing inference strategies to prevent tracking loss and realize fast target re-localization.

Personal Evaluation: Ordinary. I want to know how it re-locates the target. Worth reading.

Details: The key contribution is to compute the probability distribution of the target and maintain a heatmap. The heatmap is only used to judge whether the target is lost; I don't know how it searches for the most probable region.
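Since the paper gives no code, this is only a sketch of one generic way a heatmap can signal target loss, not SiamX's actual rule: turn the response map into a probability distribution with a softmax and flag the target as lost when the distribution is too flat, measured by entropy normalised to [0, 1]. The entropy criterion is my substitution, and the 0.9 threshold is an assumption.

```python
import math

# Assumption: the heatmap is a 2-D list of raw response values; threshold is hand-picked.

def to_distribution(heatmap):
    """Softmax over a 2-D response map (list of rows), returned as a flat list."""
    flat = [v for row in heatmap for v in row]
    m = max(flat)
    exps = [math.exp(v - m) for v in flat]  # subtract the max for numerical stability
    z = sum(exps)
    return [e / z for e in exps]

def normalized_entropy(probs):
    """Entropy divided by log(N): near 0 for one sharp peak, 1 for a flat map."""
    if len(probs) < 2:
        return 0.0
    h = -sum(p * math.log(p) for p in probs if p > 0)
    return h / math.log(len(probs))

def target_lost(heatmap, max_entropy=0.9):
    """Flag the target as lost when the response is spread over the whole map."""
    return normalized_entropy(to_distribution(heatmap)) > max_entropy

peaked = [[0.0] * 17 for _ in range(17)]
peaked[8][8] = 20.0
flat = [[0.0] * 17 for _ in range(17)]
print(target_lost(peaked), target_lost(flat))
```

This still does not answer my open question of where to search next; taking the argmax of the distribution would be the naive choice.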

Deep evaluation: No code. The key contribution does not seem reliable.

5. ‘Skimming-Perusal’ Tracking: A Framework for Real-Time and Robust Long-term Tracking

The paper’s purpose:

(1) Traditional long-term trackers are limited.

(2) Finding the missing target object and accelerating the global search.

Contributions:

(1) Determining whether the tracked object is present or absent, and then choosing local search or global search, respectively, as the tracking strategy for the next frame.

(2) Speeding up the image-wide global search with a novel skimming module that efficiently chooses the most probable regions from a large number of sliding windows.
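Before reading the code, here is my understanding of skimming as a generic coarse-to-fine filter, with hypothetical names, window size, stride and k: slide windows over the whole frame, score each one with a cheap function, and keep only the top-k windows for the expensive perusal (verification) step. The toy distance-based score stands in for the paper's learned skimming module.

```python
import heapq

# Assumption: square windows, a fixed stride and a toy scoring function.

def sliding_windows(img_w, img_h, win, stride):
    """All (x, y, win, win) windows that fit inside the frame with the given stride."""
    return [(x, y, win, win)
            for y in range(0, img_h - win + 1, stride)
            for x in range(0, img_w - win + 1, stride)]

def skim(windows, cheap_score, k):
    """Keep the k windows with the highest cheap score for the expensive step."""
    return heapq.nlargest(k, windows, key=cheap_score)

def peruse(candidates, expensive_score):
    """Run the costly verifier only on the skimmed candidates and return the best one."""
    return max(candidates, key=expensive_score)

def toy_score(w, target=(400, 200)):
    """Toy stand-in: negative squared distance of the window centre to a target point."""
    cx, cy = w[0] + w[2] / 2, w[1] + w[3] / 2
    return -((cx - target[0]) ** 2 + (cy - target[1]) ** 2)

windows = sliding_windows(640, 480, win=128, stride=64)
candidates = skim(windows, toy_score, k=5)
print(len(windows), peruse(candidates, toy_score))
```

The saving comes from calling the expensive scorer on 5 windows instead of all 54; in a real tracker the cheap and expensive scores would of course be different networks.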

Personal Evaluation: The global search is useful. Worth reading.

Details: The key contribution is the skimming global search.

Another contribution is judging whether the target is lost.

Its approach uses the similarity between the template and the candidate bounding boxes to judge whether the target is lost.
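A minimal sketch of that presence check, assuming the template and each candidate box are already embedded as feature vectors and that a fixed cosine-similarity threshold decides presence (the 0.6 value is my assumption, and the paper's similarity function may differ): if the best candidate is similar enough, the next frame uses local search; otherwise it switches to global search.

```python
import math

# Assumption: features are plain vectors; the threshold is hand-picked.

def cosine(u, v):
    """Cosine similarity of two feature vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm > 0 else 0.0

def next_search_mode(template_feat, candidate_feats, present_thr=0.6):
    """Return ('local', idx) if the best candidate matches the template, else ('global', None)."""
    if not candidate_feats:
        return "global", None
    sims = [cosine(template_feat, c) for c in candidate_feats]
    best = max(range(len(sims)), key=sims.__getitem__)
    if sims[best] >= present_thr:
        return "local", best
    return "global", None

template = [1.0, 0.0, 0.0]
print(next_search_mode(template, [[0.0, 1.0, 0.0], [0.9, 0.1, 0.0]]))
print(next_search_mode(template, [[0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]))
```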

Deep evaluation: The judgment mechanism is rough but efficient. The paper provides code, and I want to check the skimming global search.