(1) Recently, I have been reading papers on trajectory prediction, conducting experiments, and trying to understand the code.

This week's progress (藤本)

This week's progress (藤崎)

Applied to Sony Semicon.

This week's progress (根来)

Continuing from last week, I was shooting footage with a GoPro.

I plan to study segmentation and related topics.

Weekly report (TANG)

Read the paper: Ma X, Dai X, Bai Y, et al. Rewrite the Stars[J]. arXiv preprint arXiv:2403.19967, 2024.

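Tentative thesis proposal title: Research on binary segmentation methods for high-resolution images and their lightweight implementation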

Weekly report: KAN (QI ZIYANG)

Kolmogorov–Arnold Networks

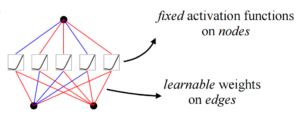

An MLP (Multi-Layer Perceptron) is essentially a linear model wrapped in nonlinear activation functions, which lets it realize nonlinear transformations of the input space. The advantage of the linear part is its simplicity: each edge carries one weight w and each node one bias b, so a whole layer is described by a weight matrix W and a bias vector b. As the number of layers increases, the representational capacity of the model increases.
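A minimal sketch of the "linear model plus fixed nonlinearity" view of an MLP, assuming ReLU as the activation and a linear output layer (both choices are illustrative, and the weights are made up): only W and b are learned, while the activation has no parameters.

```python
import numpy as np

def relu(z: np.ndarray) -> np.ndarray:
    # Fixed nonlinearity: it has no learnable parameters.
    return np.maximum(z, 0.0)

def mlp_forward(x: np.ndarray, layers: list[tuple[np.ndarray, np.ndarray]]) -> np.ndarray:
    """Apply relu(W x + b) for each hidden layer and a plain W x + b at the output."""
    for i, (W, b) in enumerate(layers):
        z = W @ x + b  # learnable linear part: weight matrix W, bias vector b
        x = z if i == len(layers) - 1 else relu(z)
    return x

# Hypothetical 2-3-1 network with hand-picked weights.
W1 = np.array([[1.0, -1.0], [0.5, 0.5], [-1.0, 2.0]])
b1 = np.zeros(3)
W2 = np.array([[1.0, 1.0, 1.0]])
b2 = np.array([0.0])
y = mlp_forward(np.array([1.0, 2.0]), [(W1, b1), (W2, b2)])
print(y)  # [4.5]
```

Stacking more (W, b) pairs adds depth; the nonlinearity between them is what keeps the stack from collapsing into a single linear map.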

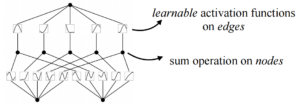

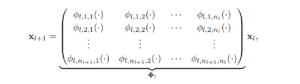

$C \approx 6ND$ (training compute C in FLOPs for a model with N parameters trained on D tokens).
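Assuming the C = 6ND line is the common scaling-law estimate of training compute (C in FLOPs, N parameters, D training tokens; about 2ND for the forward pass and 4ND for the backward pass), evaluating it is plain arithmetic. The model size and token count below are hypothetical.

```python
def training_flops(n_params: float, n_tokens: float) -> float:
    """Approximate training compute C ~= 6 * N * D in FLOPs.

    Roughly 2 N D for the forward pass plus 4 N D for the backward pass.
    """
    return 6.0 * n_params * n_tokens

# Hypothetical example: a 1e9-parameter model trained on 2e10 tokens.
c = training_flops(1e9, 2e10)
print(f"{c:.2e}")  # 1.20e+20
```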

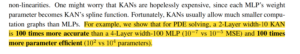

Comparing MLPs and KANs, the biggest difference is that MLPs learn linear weights and apply fixed nonlinear activations on the nodes, whereas KANs directly learn parameterized nonlinear activation functions on the edges. Because each spline has more parameters than a single weight, an individual spline function is harder to learn than a linear function, but KANs typically need only a smaller network to achieve the same effect.
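The trade-off can be made concrete by counting parameters. This sketch assumes each KAN edge holds one B-spline with G + k coefficients (G grid intervals, spline order k) and ignores the per-edge scale and base-function weights; the layer widths are hypothetical and chosen only for illustration.

```python
def mlp_params(widths: list[int]) -> int:
    """Weights plus biases of a fully connected MLP with the given layer widths."""
    return sum(n_in * n_out + n_out for n_in, n_out in zip(widths[:-1], widths[1:]))

def kan_params(widths: list[int], grid: int = 5, k: int = 3) -> int:
    """Spline coefficients of a KAN: one (grid + k)-coefficient spline per edge.

    Simplification: ignores per-edge scale factors and base-function weights.
    """
    return sum(n_in * n_out * (grid + k) for n_in, n_out in zip(widths[:-1], widths[1:]))

# Hypothetical widths: a tiny KAN versus a wide MLP.
print(kan_params([2, 5, 1]))         # 120
print(mlp_params([2, 100, 100, 1]))  # 10501
```

For equal widths a KAN has (G + k) times as many parameters per edge, so the argument for KANs only holds if much narrower networks suffice.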

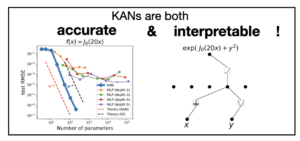

The paper shows that even KANs with very few nodes can match or even outperform larger MLPs.

Because fewer nodes make the network structure simpler, the paper uses this to argue that KANs are interpretable.

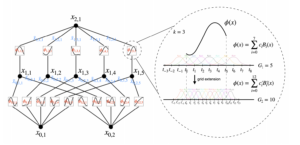

KAN structure

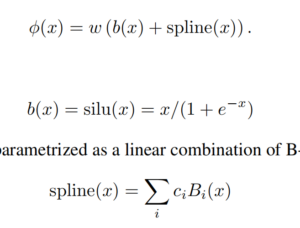

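Many short, wave-like pieces (roughly three-quarters of a sine period each) can be combined to fit a function of arbitrary shape; in KANs, B-spline basis functions play this role. In other words, following the Kolmogorov–Arnold representation theorem, two nested summations of univariate activation functions, each parameterized as a B-spline, are enough.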
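A minimal sketch of one learnable edge function φ(x) = Σ c_i B_i(x), using the Cox–de Boor recursion for the B-spline basis. Assumptions: a uniform grid on [-1, 1] with G = 5 intervals and cubic splines (k = 3), extended by k knots on each side so there are G + k basis functions. The function names are hypothetical; this is not the pykan implementation.

```python
import numpy as np

def bspline_basis(x: np.ndarray, grid: int = 5, k: int = 3,
                  lo: float = -1.0, hi: float = 1.0) -> np.ndarray:
    """Return a (len(x), grid + k) matrix of degree-k B-spline basis values."""
    h = (hi - lo) / grid
    t = lo + h * np.arange(-k, grid + k + 1)  # uniform knots, extended by k on each side
    x = np.asarray(x, dtype=float)[:, None]
    # Degree 0: indicator of each knot interval [t_i, t_{i+1}).
    B = ((x >= t[:-1]) & (x < t[1:])).astype(float)
    # Cox-de Boor: build degree p from degree p - 1.
    for p in range(1, k + 1):
        left = (x - t[:-(p + 1)]) / (t[p:-1] - t[:-(p + 1)]) * B[:, :-1]
        right = (t[p + 1:] - x) / (t[p + 1:] - t[1:-p]) * B[:, 1:]
        B = left + right
    return B

def spline_activation(x: np.ndarray, coef: np.ndarray, grid: int = 5, k: int = 3) -> np.ndarray:
    """phi(x) = sum_i c_i B_i(x): one learnable univariate function on a KAN edge."""
    return bspline_basis(x, grid, k) @ coef

x = np.linspace(-1.0, 0.99, 50)
B = bspline_basis(x)
print(B.shape)  # (50, 8)
```

Training a KAN edge means learning the coefficient vector `coef`; the basis itself stays fixed for a given grid.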

Beyond this two-summation form, the number of layers and the number of nodes per layer of a KAN can be chosen freely.
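For reference, the Kolmogorov–Arnold representation theorem writes a continuous $f:[0,1]^n \to \mathbb{R}$ as two nested sums of univariate functions, and a general KAN stacks such layers with arbitrary widths $[n_0, n_1, \dots, n_L]$. The index convention below, with $\phi_{l,j,i}$ the learnable function on the edge from node $i$ of layer $l$ to node $j$ of layer $l+1$, is an assumption chosen for readability:

$$
f(x_1,\dots,x_n) = \sum_{q=1}^{2n+1} \Phi_q\!\left(\sum_{p=1}^{n} \phi_{q,p}(x_p)\right)
$$

$$
x_{l+1,j} = \sum_{i=1}^{n_l} \phi_{l,j,i}(x_{l,i}), \quad j = 1,\dots,n_{l+1}, \qquad
\mathrm{KAN}(\mathbf{x}) = (\Phi_{L-1} \circ \cdots \circ \Phi_1 \circ \Phi_0)(\mathbf{x})
$$

The theorem itself corresponds to a two-layer KAN with widths $[n, 2n+1, 1]$.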

Residual activation functions:

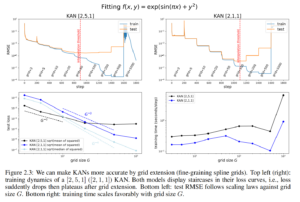
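A minimal sketch of a residual activation, assuming the form φ(x) = w_b · silu(x) + w_s · spline(x), where silu(x) = x / (1 + e^(-x)) acts like a residual path next to the spline. The paper's exact parameterization of the scale factors differs between versions, so w_b and w_s here are assumptions. For brevity the spline is degree 1 (piecewise linear), not cubic.

```python
import numpy as np

def silu(x: np.ndarray) -> np.ndarray:
    # Base function b(x) = x * sigmoid(x).
    return x / (1.0 + np.exp(-x))

def residual_activation(x: np.ndarray, coef: np.ndarray, w_b: float = 1.0,
                        w_s: float = 1.0, lo: float = -1.0, hi: float = 1.0) -> np.ndarray:
    """phi(x) = w_b * silu(x) + w_s * spline(x).

    Simplification: spline(x) is a degree-1 spline whose coefficients `coef`
    are its values on a uniform grid over [lo, hi]. Outside [lo, hi],
    np.interp holds the end values constant.
    """
    knots = np.linspace(lo, hi, len(coef))
    spline = np.interp(x, knots, coef)  # linear interpolation == sum of hat-function B-splines
    return w_b * silu(x) + w_s * spline

# With all-zero spline coefficients, phi reduces to the base function silu(x).
print(residual_activation(np.array([0.0]), np.zeros(5)))  # [0.]
```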

KAN accuracies

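For KANs, one can first train a KAN with fewer parameters and then extend it to a KAN with more parameters by making its spline grids finer (grid extension). The coefficients on the finer grid are fitted by least squares to the already-trained coarse spline, so the larger model starts from the function already learned and does not need to be retrained from scratch.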
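A minimal sketch of grid extension: the fine-grid coefficients c' are chosen to minimize the squared difference between the new spline Σ c'_j B'_j(x) and the trained coarse spline Σ c_i B_i(x) over sample points. Assumptions: degree-1 (hat-function) B-splines on a uniform grid over [-1, 1] instead of cubic ones, uniform sample points, and hypothetical coarse coefficients.

```python
import numpy as np

def hat_basis(x: np.ndarray, n_coef: int, lo: float = -1.0, hi: float = 1.0) -> np.ndarray:
    """(len(x), n_coef) matrix of degree-1 B-splines (hat functions) on a uniform grid."""
    knots = np.linspace(lo, hi, n_coef)
    h = knots[1] - knots[0]
    return np.clip(1.0 - np.abs(x[:, None] - knots[None, :]) / h, 0.0, None)

def extend_grid(coef_coarse: np.ndarray, n_fine: int, lo: float = -1.0,
                hi: float = 1.0, n_samples: int = 200) -> np.ndarray:
    """Least-squares fit of a finer spline to an already-trained coarse spline."""
    x = np.linspace(lo, hi, n_samples)
    target = hat_basis(x, len(coef_coarse), lo, hi) @ coef_coarse  # coarse spline values
    A = hat_basis(x, n_fine, lo, hi)                               # fine-grid design matrix
    coef_fine, *_ = np.linalg.lstsq(A, target, rcond=None)
    return coef_fine

# Hypothetical trained coefficients on 5 grid intervals, refined to 10 intervals.
coarse = np.array([0.0, 1.0, -1.0, 2.0, 0.5, 0.0])
fine = extend_grid(coarse, n_fine=11)
```

Because the 10-interval grid here contains every knot of the 5-interval grid, the fine spline reproduces the coarse one exactly; with non-nested grids or cubic splines the fit is only approximate, which is why a least-squares fit is used.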

Weekly report (鄭)

I attended several job-hunting seminars. There is another internship information session this week, so I plan to attend it.

I studied Japanese.

Weekly report (中本)

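I couldn't narrow down the companies and haven't been able to write my ES (entry sheet) yet. This week I will make a decision and write it. I attended a MinebeaMitsumi seminar.

For the research section of entry forms and the ES, should I write about my research from kosen (technical college)? Or should I at least decide on a research theme first?

I joined a student project and am creating the views for the screen display.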

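Weekly report (飯田)

Last week I submitted my ES to SoftBank. I am also thinking of applying to Hitachi and Sony.

I would like to gradually start studying for the SPI as well.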

Weekly report (西元)

I submitted my ES to SoftBank. This week I will work on writing ESs and study for the SPI.

The front end of smart_project is finished, so I plan to study the back end.